Somewhat related to what I wrote in "The Internet and .txt files in the era of AI, ML and data scrapping", I am receiving more and more notifications of unusual server load on my infrastructure. A short search through the logs shows various bots and scrapers indexing a lot of data.
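
As a rough illustration of that kind of log search, here is a minimal Python sketch that tallies requests per user agent. It assumes the access log uses the common "combined" format, where the user agent is the last quoted field; the sample lines, IP addresses, and the `ExampleBot` name are made up for the example and are not taken from my logs.

```python
import re
from collections import Counter

# In the "combined" log format, each line ends with: "referer" "user-agent"
UA_PATTERN = re.compile(r'"[^"]*" "(?P<ua>[^"]*)"\s*$')


def count_user_agents(lines):
    """Count how many requests each user agent string made."""
    counts = Counter()
    for line in lines:
        match = UA_PATTERN.search(line)
        if match:
            counts[match.group("ua")] += 1
    return counts


# Hypothetical sample lines (documentation IP ranges, invented agents).
sample_log = [
    '203.0.113.5 - - [10/May/2024:10:00:00 +0000] "GET /a HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [10/May/2024:10:00:01 +0000] "GET /b HTTP/1.1" 200 734 "-" "ExampleBot/1.0"',
    '198.51.100.7 - - [10/May/2024:10:00:02 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
]

counts = count_user_agents(sample_log)
for agent, hits in counts.most_common():
    print(hits, agent)
```

On a real server you would pass an open log file instead of `sample_log`; the path and log format depend on your web server configuration.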

While this is not illegal, it still raises concerns about user-generated data being used by private companies, even in commercial products, without consent. We are currently in a Wild West scenario, and even if most companies don't have malicious intentions, there can still be issues.

We are watching an exponential increase in AI companies, and almost any script kiddie can write a PHP or Python script to start scraping the web. Add to this the massive infrastructure rented by AI start-ups, and if you are unlucky and your infrastructure cannot handle the load, you are effectively facing a DDoS.

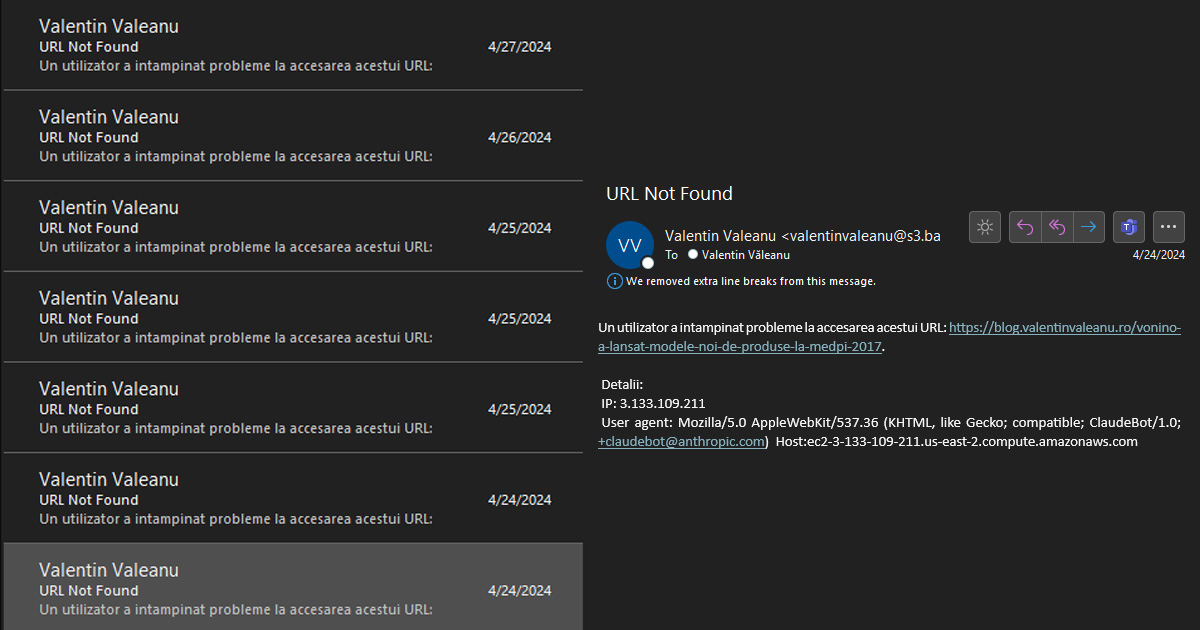

I don't want to point the finger at Claude, but they were the ones I found in the logs first. This is not about Claude, and they do a good job of properly identifying their agent.

But what about the rest of the crawlers? They might not respect robots.txt, might not identify themselves properly, and might not provide any method to limit their access. How should we deal with the exponential increase in scrapers? Will server admins now need to search the logs daily and update robots.txt or the firewall to block every new crawler? Owners will need to pay more for infrastructure resources to deal with all these artificial visits, and traffic analytics will have a bad day trying to separate human visits from crawlers.
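
For crawlers that do identify themselves and honor robots.txt, a rule can be checked before deploying it. The sketch below uses Python's standard `urllib.robotparser` to confirm what a given robots.txt allows. The `ClaudeBot` token is taken from the agent name seen above and should be verified against the crawler's own documentation; the rules and `example.com` URLs are only an illustration, not my actual configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one named crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "ClaudeBot", "https://example.com/post"))
print(is_allowed(ROBOTS_TXT, "SomeOtherAgent", "https://example.com/post"))
```

This only tells you what a well-behaved crawler should do. It does nothing against a crawler that ignores robots.txt, which is exactly the case where the firewall has to step in.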

Just a few examples:

- Anthropic’s ClaudeBot is aggressively scraping the Web in recent days

- Why doesn't ClaudeBot / Anthropic obey robots.txt?

- Claudebot attack

This is why we can't have nice things, and why we now have double opt-in and cookie banners.

Cloudflare has an option to block AI scrapers, and they wrote a blog post about it: "Easily manage AI crawlers with our new bot categories".

But a third-party service should not be the solution to this...