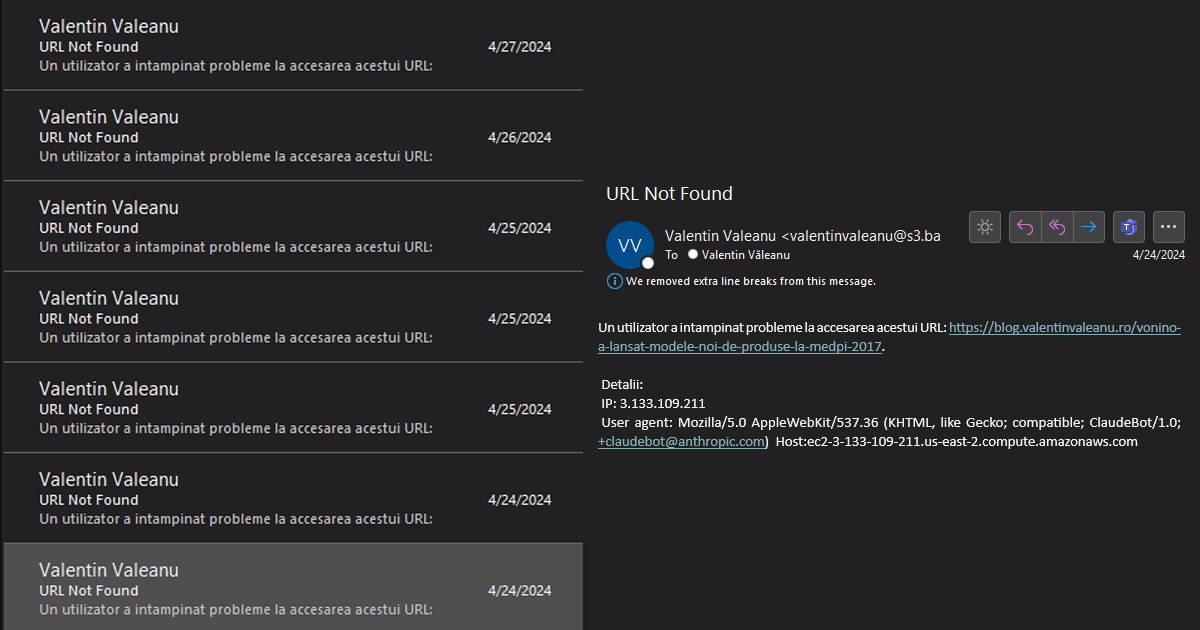

I'm exploring replacing the WordPress search engine with an AI-powered search. It would use AI models to understand queries beyond simple keyword matching and return more natural, readable responses. I embedded my content with text-embedding-ada-002, indexed it in an Azure AI Search index, and ran some queries. I noticed that the query responses varied […]
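Embedding-based search ranks documents by how close their vectors are to the query's vector, not by shared keywords. The sketch below is a minimal brute-force illustration of that idea. It assumes cosine similarity, which is the metric commonly recommended for text-embedding-ada-002 vectors. It is not the Azure AI Search API. The 3-dimensional vectors, document ids, and the helper names `cosine_similarity` and `rank_documents` are hypothetical. Real ada-002 embeddings have 1536 dimensions, and the service typically uses an approximate nearest-neighbor index such as HNSW rather than scanning every document.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the ids of the k documents most similar to the query, best first.

    Brute-force scan over every document; a real vector index would use an
    approximate nearest-neighbor structure instead.
    """
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [doc_id for doc_id, _ in scored[:k]]


# Hypothetical toy embeddings standing in for ada-002 vectors of WordPress posts.
docs = {
    "post-refunds": [0.9, 0.1, 0.0],
    "post-shipping": [0.2, 0.8, 0.1],
    "post-about-us": [0.0, 0.1, 0.9],
}
# Hypothetical embedding of a query such as "how do I get my money back?"
query = [0.8, 0.3, 0.0]

print(rank_documents(query, docs, k=2))  # → ['post-refunds', 'post-shipping']
```

The refunds post ranks first even though the query shares no keywords with it, because its vector points in nearly the same direction as the query's vector.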