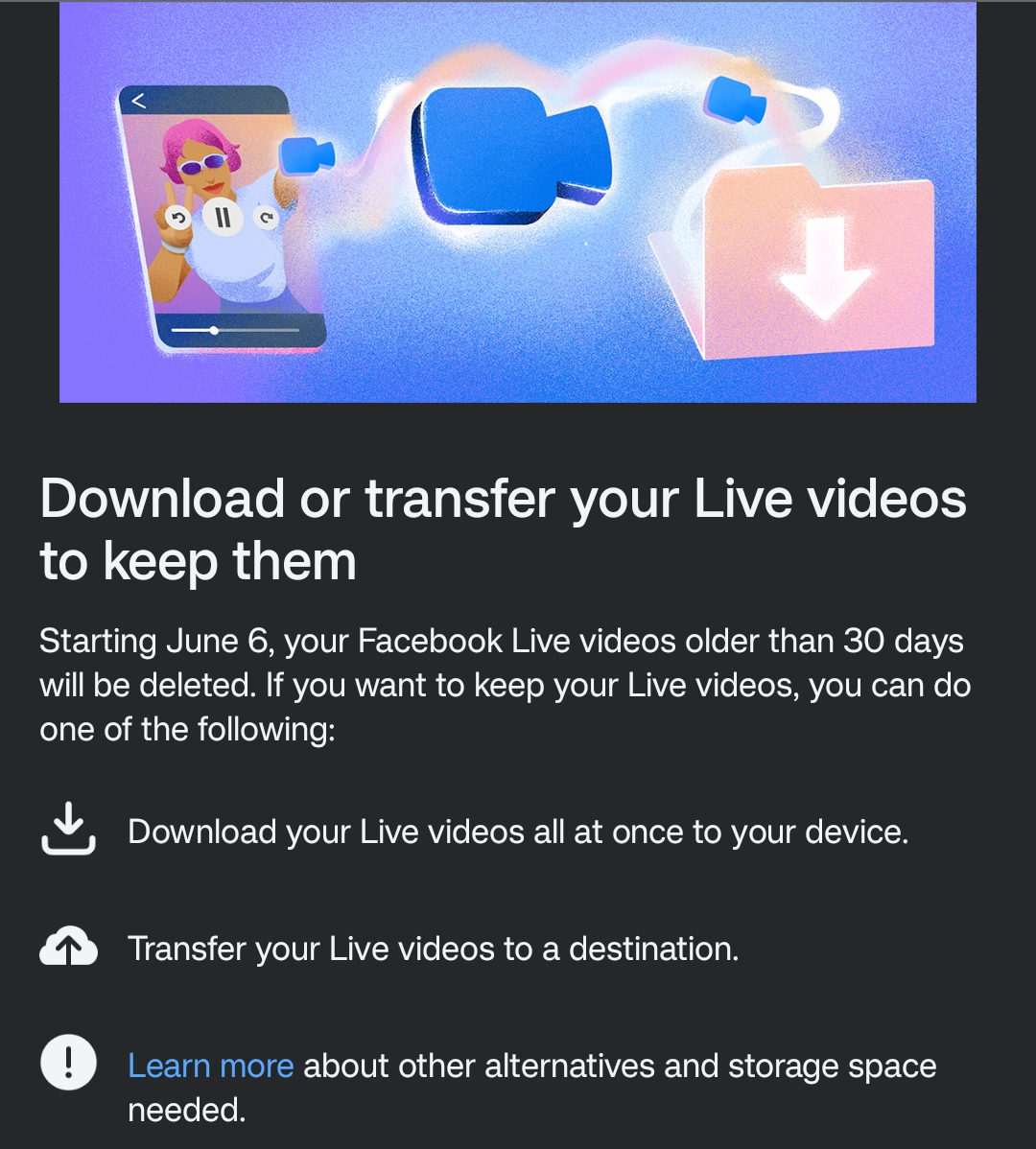

Let's be honest: most of the time, using a website's search function is frustrating. Finding what you're actually looking for often takes endless scrolling and several page changes, and that's before you try to search by post category, date, or, God forbid, author. This is because a typical search function […]